Test-Driven Development of AI Coding Agents

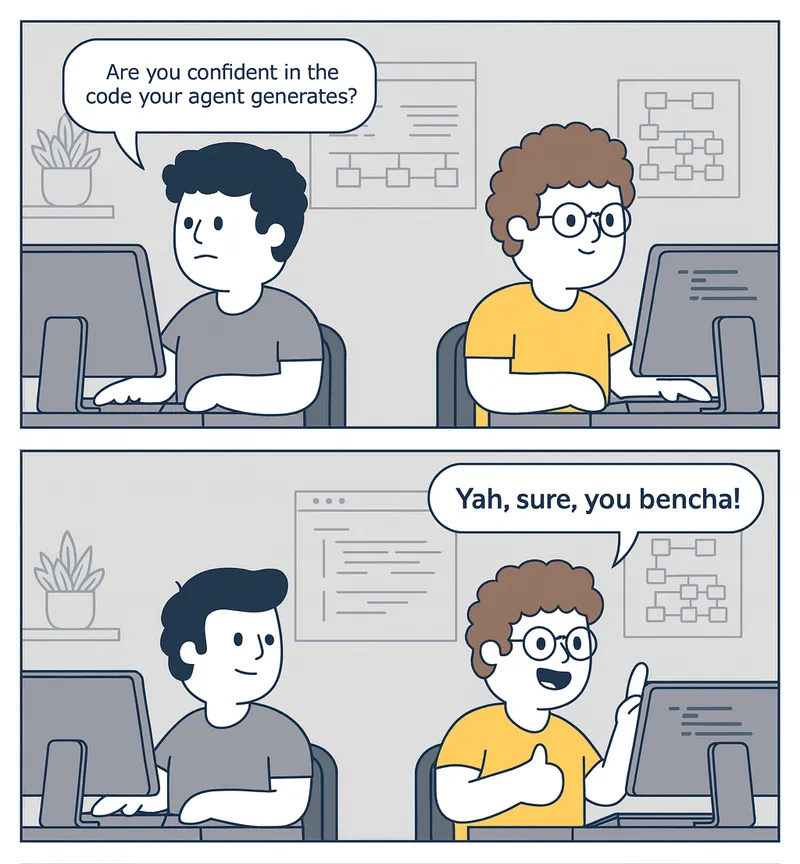

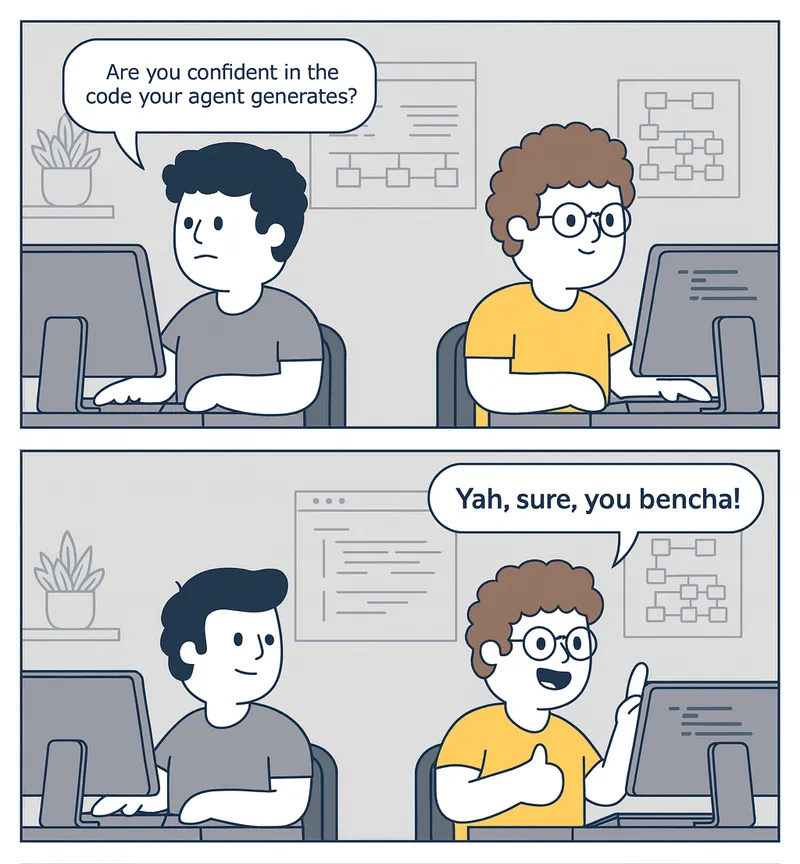

Stop evaluating with vibes. youBencha brings TDD discipline to AI agent development—define expectations first, iterate to green, and prevent regressions with objective, reproducible metrics.


Apply TDD principles to your AI agent development

Install globally via npm to access the yb CLI anywhere.

```shell
npm install -g youbencha
```

Run `yb init` to generate a starter configuration, or create your own. Define expectations before writing your agent. This forces you to clearly specify what success looks like, which is the core TDD principle.

```yaml
# Quick start: yb init creates suite.yaml with helpful comments
# Or define your own test case:
name: "Add README Comment"
description: "Agent should add a helpful comment to the README"
repo: https://github.com/youbencha/hello-world.git
branch: main

agent:
  type: copilot-cli
  agent_name: readme-commenter # Will create this agent next
  config:
    prompt: "Add a comment at the top of README.md explaining this is a test repository"

evaluators:
  - name: git-diff
  - name: agentic-judge
    config:
      type: copilot-cli
      assertions:
        readme_modified: "README.md was modified. Score 1 if true, 0 if false."
        has_comment: "A comment was added. Score 1 if true, 0 if false."
```

Now create a named agent in `.github/agents/` with specific instructions. Named agents let you version-control your agent's behavior and reuse it across test cases.

Save the following as `.github/agents/readme-commenter.md`:

```markdown
---
name: readme-commenter
description: Agent that adds helpful comments to README files
---

You are a documentation specialist. When asked to modify a README:

1. Read the existing content carefully
2. Add clear, helpful comments that explain the purpose
3. Preserve existing formatting and structure
4. Keep changes minimal and focused
```

Execute your test case and review the results of the evaluators. Which assertions passed? Which failed?

```shell
yb run -c suite.yaml
```

If assertions fail, improve your approach: clarify prompts, add context, or constrain scope. Then run again. This is the TDD loop: iterate until your assertions pass.
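Before each rerun it can help to validate the configuration, since `yb validate` checks a suite without running the agent. The function below is a minimal sketch of one iteration, not part of youBencha itself: `tdd_iteration` is a hypothetical name, and it assumes `yb validate` exits non-zero when the configuration is invalid.

```shell
# Hypothetical helper (not part of youBencha): one TDD iteration.
# Assumes `yb validate` exits non-zero for an invalid suite configuration.
tdd_iteration() {
  local config="${1:-suite.yaml}"

  # Catch configuration mistakes before spending an agent run on them.
  if ! yb validate -c "$config"; then
    echo "invalid configuration: $config" >&2
    return 1
  fi

  # Run the agent and evaluators; inspect which assertions passed afterwards.
  yb run -c "$config"
}
```

Call it as `tdd_iteration suite.yaml` after each prompt change. Validating first keeps a typo in the YAML from being mistaken for an agent failure.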

```shell
# Refine prompt, run again
yb run -c suite.yaml
```

Essential commands for working with youBencha

| Command | Description | Usage |
|---|---|---|
| `yb init` | Create a starter configuration file with helpful comments | `yb init [--force]` |
| `yb run` | Execute an evaluation suite against an AI agent | `yb run -c suite.yaml [--delete-workspace]` |
| `yb report` | Generate a human-readable report from evaluation results | `yb report --from results.json [--format markdown\|json]` |
| `yb validate` | Validate suite configuration without running an evaluation | `yb validate -c suite.yaml [-v]` |
| `yb list` | List available built-in evaluators and their descriptions | `yb list` |

TDD principles applied to AI agent evaluation

Apply the classic TDD cycle to agents: define assertions first, iterate until they pass, then refine context and expand coverage. Reproducible, objective, and scalable.

Move beyond 'vibes-based' evaluation. Define clear success criteria with binary, graded, or quantitative assertions that measure task completion, code quality, and instruction following.

Pluggable adapter system works with any AI agent. Start with GitHub Copilot CLI today, switch to other agents tomorrow. No vendor lock-in.

Safe, repeatable evaluations in isolated workspaces. Pin to specific commits, branches, or repositories. Complete execution logs for debugging agent failures.

When you change prompts or configurations, your test suite catches regressions. Build baseline results, compare new runs, and detect quality degradation automatically.

TDD for agents in practice

Define assertions first, run your agent, analyze results. When assertions fail, clarify prompts, add context, or constrain scope. Iterate until green—just like TDD for code.

```shell
# The TDD iteration loop
yb run -c testcase.yaml  # Run agent
# Analyze results, adjust prompt
yb run -c testcase.yaml  # Run again until green
```

Use binary assertions for yes/no checks, graded assertions for partial credit, and quantitative assertions for measurable criteria. Together they can cover task completion, code quality, best practices, and documentation.
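To reason about how graded scores combine, here is a hypothetical helper that averages assertion scores and compares the mean with a pass threshold. youBencha's own aggregation may differ; the averaging rule, the threshold, and the name `assertion_summary` are all assumptions for illustration.

```shell
# Hypothetical helper (not youBencha's scoring): average assertion scores
# and compare the mean against a pass threshold.
# Usage: assertion_summary THRESHOLD SCORE [SCORE...]
assertion_summary() {
  local threshold="$1"
  shift
  if [ "$#" -eq 0 ]; then
    echo "no scores given" >&2
    return 2
  fi
  # One score per line; awk handles the floating-point arithmetic.
  printf '%s\n' "$@" | awk -v t="$threshold" '
    { sum += $1; n++ }
    END {
      mean = sum / n
      pass = (mean >= t + 0)
      printf "mean=%.2f %s\n", mean, (pass ? "PASS" : "FAIL")
      exit (pass ? 0 : 1)
    }'
}
```

For example, `assertion_summary 0.8 1 0.7 1` prints `mean=0.90 PASS`, while `assertion_summary 0.8 1 0 1` fails because a single 0 on a binary assertion pulls the mean to 0.67. Averaging is only one choice; a stricter gate might require every binary assertion to score 1 regardless of the graded ones.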

```yaml
assertions:
  task_completed: "Feature implemented. Score 1/0.5/0."
  documentation_quality: "Score 1.0 if full JSDoc, 0.7 if partial."
  tests_pass: "All tests pass. Score 1 if true, 0 if false."
```

True agent quality means performing well across varied scenarios. Test on different repositories, branches, and commits. Compare against "gold standard" reference implementations with the `expected-diff` evaluator.
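Pinning `commit:` requires a concrete SHA. One way to turn a branch name into the commit it currently points at is `git ls-remote`, which prints `<sha><TAB><ref>` for each matching ref. `resolve_branch_sha` is a hypothetical helper for illustration, not a youBencha command.

```shell
# Hypothetical helper (not a youBencha command): resolve a branch head to a
# commit SHA with `git ls-remote` so the suite's `commit:` field can be pinned.
resolve_branch_sha() {
  local repo="$1" branch="$2" sha
  # ls-remote prints "<sha><TAB><ref>"; keep the SHA of the first match.
  sha=$(git ls-remote "$repo" "refs/heads/$branch" | cut -f1 | head -n 1)
  if [ -z "$sha" ]; then
    echo "branch not found: $branch" >&2
    return 1
  fi
  echo "$sha"
}
```

Running `resolve_branch_sha https://github.com/example/project.git main` and copying the result into `commit:` keeps later runs on the same snapshot even after `main` moves.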

```yaml
# Pin to exact commit for reproducibility
repo: https://github.com/example/project.git
branch: main
commit: abc123def456
```

Build a test suite that protects against regressions. When you change prompts or agent configurations, run your suite to ensure previous capabilities still work. Store baseline results and compare.
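The baseline comparison can start as simply as comparing failed-assertion counts. This sketch assumes each results file exposes `.summary.failed`, the field read by the GitHub Actions example below; `check_regression` is a hypothetical name, and it takes the two counts as plain integers.

```shell
# Hypothetical helper: compare failed-assertion counts from a stored baseline
# run and the current run. Returns non-zero if the current run is worse.
check_regression() {
  local baseline_failed="$1" current_failed="$2"
  if [ "$current_failed" -gt "$baseline_failed" ]; then
    echo "REGRESSION: failed assertions went from $baseline_failed to $current_failed" >&2
    return 1
  fi
  echo "OK: failed assertions went from $baseline_failed to $current_failed"
}
```

A possible call, where `baseline.json` is a hypothetical saved copy of a known-good run: `check_regression "$(jq '.summary.failed' baseline.json)" "$(jq '.summary.failed' results.json)"`. Comparing counts misses the case where one assertion starts passing while another starts failing; comparing results per assertion would catch that.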

```shell
# Run regression suite after changes
for config in regression-suite/*.yaml; do
  yb run -c "$config"
done
```

Integrate youBencha into GitHub Actions or your CI pipeline. Catch agent regressions automatically before deployment. Use post-evaluation hooks for Slack, Teams, or database export.

```yaml
# GitHub Actions integration
- run: npm install -g youbencha
- run: yb run -c suite.yaml
- run: |
    FAILED=$(jq '.summary.failed' results.json)
    [ "$FAILED" -eq 0 ] || exit 1
```
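For a CI job that covers a whole regression directory rather than a single suite, the regression loop shown earlier can be extended to count failures and fail the job. This is a minimal sketch: `run_suites` is a hypothetical name, and it assumes `yb run` exits non-zero when a run itself fails. Assertion failures may still need a results check such as the `jq` step above.

```shell
# Hypothetical helper: run every suite in a directory and fail if any run errors.
# Assumes `yb run` exits non-zero when the run itself fails.
run_suites() {
  local dir="$1" failed=0 config
  for config in "$dir"/*.yaml; do
    [ -e "$config" ] || continue  # the glob matched no files
    if ! yb run -c "$config"; then
      echo "run failed: $config" >&2
      failed=$((failed + 1))
    fi
  done
  echo "$failed suite(s) failed"
  [ "$failed" -eq 0 ]
}
```

In a workflow this could replace the single `yb run` step with `run_suites regression-suite`, so one broken suite no longer hides behind a later successful one.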